Author: Zombi Istilasi

-

Kernel Trick Example

I’d like to demonstrate the kernel trick using a simple example here. The equations are taken from [1]. My motivation is to look at it from a more practical perspective. Inputs and Outputs: Of course we have X as input and y as output. But I want to talk about the inputs to the prediction equation to solve…

-

Precision vs. Recall

This is pretty self-explanatory. Here are some remarks on the meanings: the prediction specifies whether the output is positive or negative, and the match between the prediction and the actual condition specifies whether the output is true or false. Precision: Precision measures how successfully we classify the positives. More clearly, how much of the positive outputs…
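As a minimal sketch of the definitions above, the snippet below counts true positives, false positives, and false negatives for binary labels and derives precision and recall from them. The function name `precision_recall` and the toy labels are assumptions made for illustration; they do not come from the original post.

```python
def precision_recall(actual, predicted):
    """Return (precision, recall) for binary labels, where 1 means positive."""
    pairs = list(zip(actual, predicted))
    # "Positive"/"negative" comes from the prediction;
    # "true"/"false" comes from whether it matches the actual condition.
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)  # true positives
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)  # false positives
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)  # false negatives
    # Guard against division by zero when a class never occurs.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical toy data: 4 actual positives, 4 predicted positives.
actual = [1, 1, 1, 0, 0, 0, 1, 0]
predicted = [1, 0, 1, 1, 0, 0, 1, 0]
precision, recall = precision_recall(actual, predicted)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Here precision answers "of everything predicted positive, how much was actually positive?", while recall answers "of everything actually positive, how much did we find?".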

-

KYK Internet with a Quota

I split this post into two parts: the story and the solution. Those short on time can jump to the Solution heading. A simple method for using unlimited KYK internet without hitting the quota. Tried and worked: Tried, but no SSH or other port could be found: Story: In this post I want to describe how I connected to unlimited internet on the network named GSBWIFI. First of all, when I connected to this network there was no internet access and…

-

Installing VMware and Kernel Modules for Fedora

When I installed vmware-player on my Fedora 33 with kernel 5.14.18-100.fc33.x86_64, I got this error after I tried to start a virtual machine: To install the required modules, I followed this post and did: However, as I’m using secure boot, I got this error during installation: Checking the journalctl output: I signed the modules manually (using /usr/src/kernels/$(uname -r)/scripts/sign-file)…

-

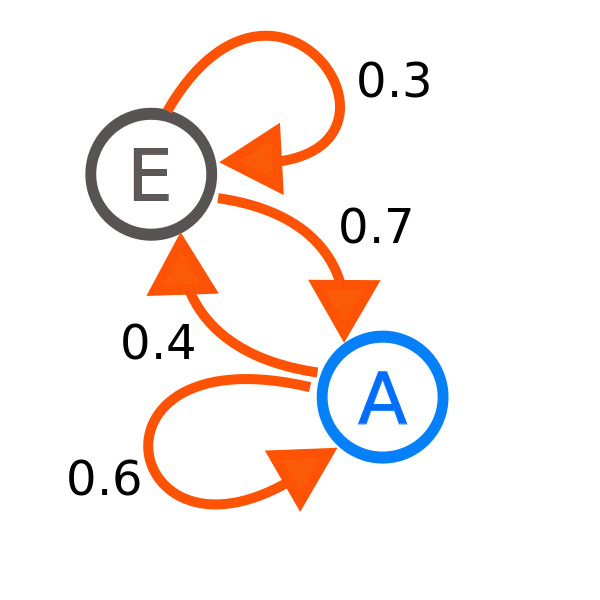

Markov Decision Processes

We start with a basic formula, the expected value. It is the expected value when you follow some transition function with some start state. Eq. 1: Note that the initial state is included in the reward function. Bellman Theorem: A policy is optimal if and only if it is greedy with respect to its induced…

-

Word2Vec

It is a mapping of words to a vector space. Words are represented as vectors so that they can be used in the machine learning domain. We can utilize this transformation and treat the words as mathematical objects to extract the required information. Given that we have the embeddings, we can do operations on them easily. Now comes the question of how to…

-

Static Games Intro – Notes

Definitions 1. Pure Nash Equilibrium: It is a state in which no player can make a better profit by changing their own action, provided that no one else changes their choice unreasonably (the other players are rational). 2. Mixed Nash Equilibrium: It is when you don’t know the opponent’s move, or it is a…
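The pure Nash equilibrium definition above can be checked by brute force in a small two-player game: a pair of actions is an equilibrium if neither player gains by deviating alone. The sketch below assumes a standard prisoner's dilemma payoff table; the payoff numbers and the function name `pure_nash_equilibria` are illustrative and not taken from the original notes.

```python
from itertools import product


def pure_nash_equilibria(payoffs, actions_1, actions_2):
    """List action pairs where no player profits from a unilateral deviation."""
    equilibria = []
    for a1, a2 in product(actions_1, actions_2):
        u1, u2 = payoffs[(a1, a2)]
        # Player 1 deviates while player 2 keeps a2 fixed.
        p1_stays = all(payoffs[(d, a2)][0] <= u1 for d in actions_1)
        # Player 2 deviates while player 1 keeps a1 fixed.
        p2_stays = all(payoffs[(a1, d)][1] <= u2 for d in actions_2)
        if p1_stays and p2_stays:
            equilibria.append((a1, a2))
    return equilibria


# Hypothetical payoffs (player 1, player 2): years in prison as negative utility.
actions = ["cooperate", "defect"]
payoffs = {
    ("cooperate", "cooperate"): (-1, -1),
    ("cooperate", "defect"): (-3, 0),
    ("defect", "cooperate"): (0, -3),
    ("defect", "defect"): (-2, -2),
}
print(pure_nash_equilibria(payoffs, actions, actions))
```

With these payoffs the only pair that survives the check is mutual defection, even though mutual cooperation would leave both players better off.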

-

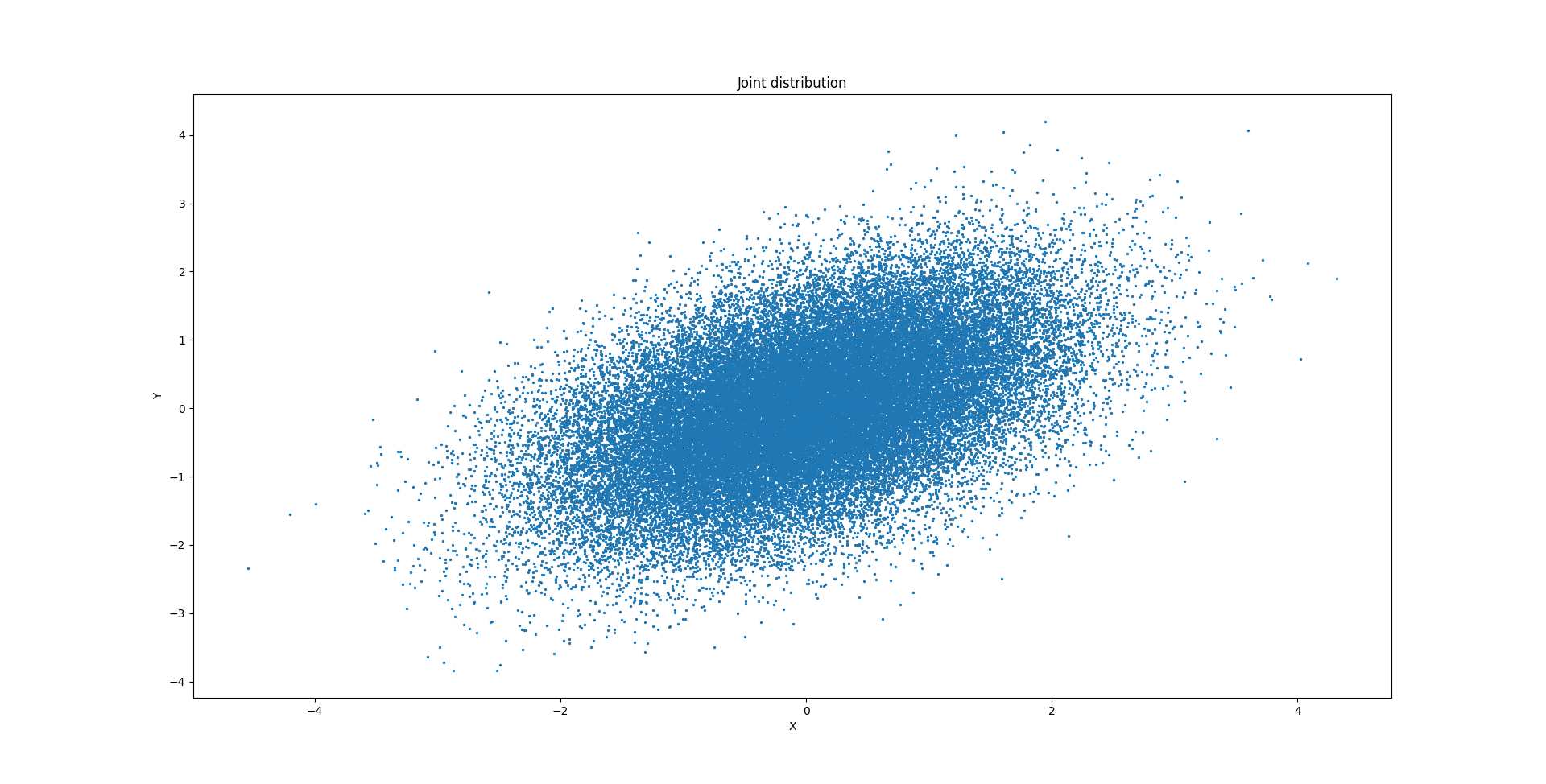

Gaussian Process

What is a Gaussian Process? A GP is simply a multivariate Gaussian distribution. The idea is very simple: we have a multivariate Gaussian distribution which includes both previous measurements (the train set) and targets (the test set). Whether it is a previous measurement or a target, I will call each of them a dimension. They are some dimensions in the Gaussian Process. Since the multivariate Gaussian…
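To make the "train and test points are dimensions of one Gaussian" idea concrete, here is a minimal sketch with only two dimensions: one observed (train) and one unknown (test). It uses the standard conditioning formulas for a bivariate Gaussian. The function name, the zero mean, and the covariance values are assumptions chosen for illustration, not something stated in the original post.

```python
def condition_bivariate_gaussian(mean, cov, observed_train):
    """Condition the test dimension of a 2-D Gaussian on the train dimension.

    mean: (mu_train, mu_test)
    cov:  ((var_train, cov_train_test), (cov_train_test, var_test))
    Returns the conditional mean and variance of the test dimension.
    """
    mu_train, mu_test = mean
    (var_train, cov_tt), (_, var_test) = cov
    gain = cov_tt / var_train  # how strongly the observation shifts the target
    cond_mean = mu_test + gain * (observed_train - mu_train)
    cond_var = var_test - gain * cov_tt  # the observation reduces uncertainty
    return cond_mean, cond_var


# Hypothetical prior: zero mean, unit variances, covariance 0.5.
mean = (0.0, 0.0)
cov = ((1.0, 0.5), (0.5, 1.0))
cond_mean, cond_var = condition_bivariate_gaussian(mean, cov, observed_train=2.0)
print(cond_mean, cond_var)
```

The more the two dimensions covary, the more the observed train value pulls the test mean toward it and the smaller the remaining variance becomes.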

-

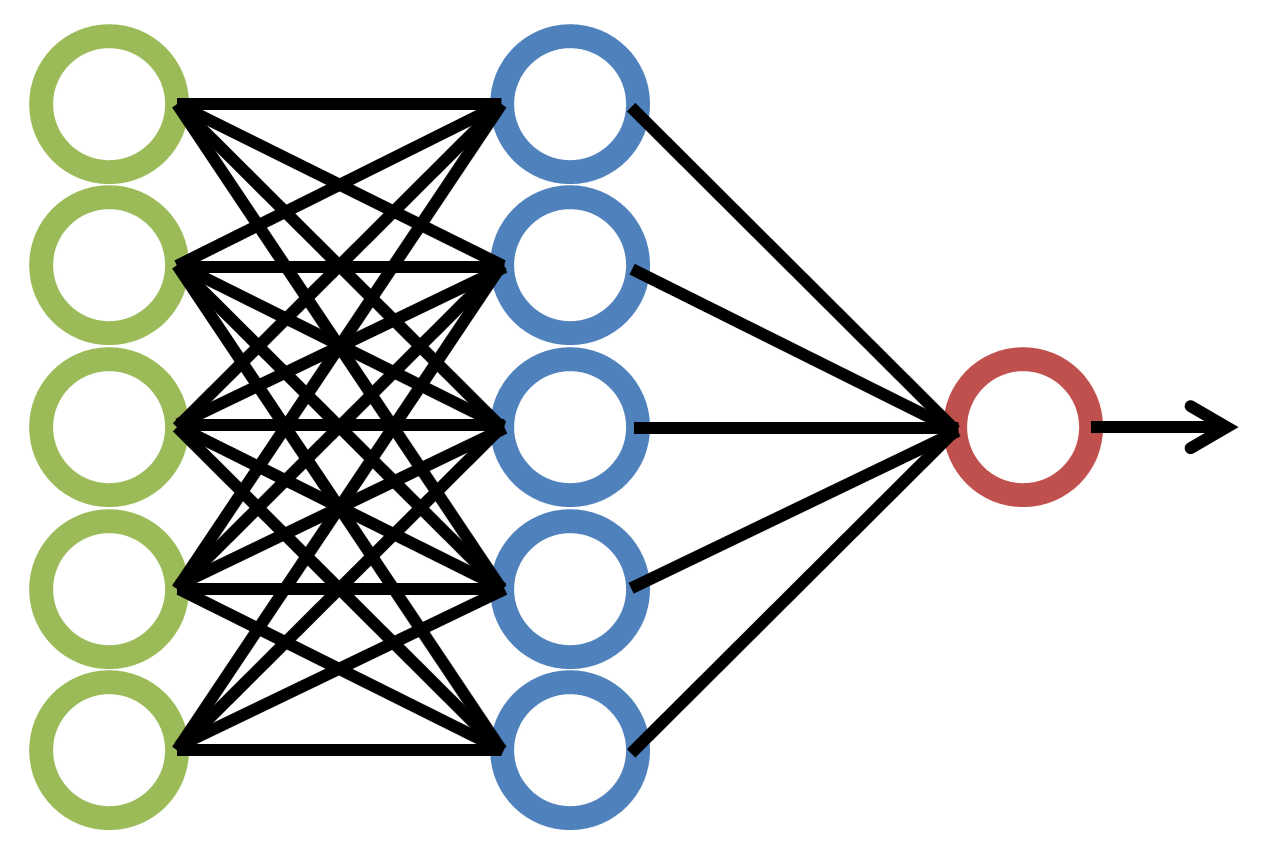

Bayesian Deep Learning

Definitions. Epistemic uncertainty: Uncertainty due to the model itself; the model doesn’t represent the data completely because we lack information (obviously we have a finite set of data). Aleatoric uncertainty: Uncertainty inside the data itself. I think of it as measurement error in sensors. Heteroscedastic noise: The noise is not independent of the input features. For example, in Hadron…

-

Markov Chain Monte Carlo Methods

Some notes for myself… Just points to remember: I skip the overview and note the details that make me grasp the concepts, usually the "why" questions. Note that they are mostly my understanding from the lectures and are not intended to be a reference; I just type up my understanding for my own future reference. What is ergodicity in…