Some notes for myself… Just points to remember. I skip the overview and note the details that make me grasp the concepts, usually "why" questions.

Can we always find an equation to analytically minimize a convex function?

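No. Not every convex function has a closed-form expression for its minimum. An example is Bayesian Logistic Regression. The negative log posterior of the weights is convex, but setting its gradient to zero has no analytical solution, so the minimum has to be found iteratively (e.g., with Newton's method or gradient descent).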
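To make this concrete, here is a minimal sketch. It assumes a one-dimensional Bayesian logistic regression with a single weight w and a Gaussian prior N(0, prior_var) on that weight. The toy data and the names `grad_and_hess` and `map_newton` are hypothetical. The negative log posterior is convex because its second derivative is always positive. Even so, setting its derivative to zero has no closed-form solution, so the sketch finds the minimum with Newton steps.

```python
import math

def sigmoid(z: float) -> float:
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def grad_and_hess(w, xs, ys, prior_var):
    # First and second derivative of the negative log posterior
    # f(w) = -sum_i log p(y_i | x_i, w) + w^2 / (2 * prior_var)
    g = w / prior_var
    h = 1.0 / prior_var
    for x, y in zip(xs, ys):
        s = sigmoid(w * x)
        g += (s - y) * x
        h += s * (1.0 - s) * x * x  # every term is >= 0, so f is convex
    return g, h

def map_newton(xs, ys, prior_var=1.0, tol=1e-12, max_iter=50):
    # f'(w) = 0 has no closed-form solution, so iterate w <- w - f'(w) / f''(w)
    w = 0.0
    for _ in range(max_iter):
        g, h = grad_and_hess(w, xs, ys, prior_var)
        step = g / h
        w -= step
        if abs(step) < tol:
            break
    return w

# Hypothetical toy data (not linearly separable)
xs = [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
ys = [0, 0, 1, 0, 1, 1]
w_map = map_newton(xs, ys)
print(w_map)
```

Newton's method is used here only because the second derivative is cheap in one dimension. Gradient descent would reach the same minimum, only more slowly.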

Laplace Approximation

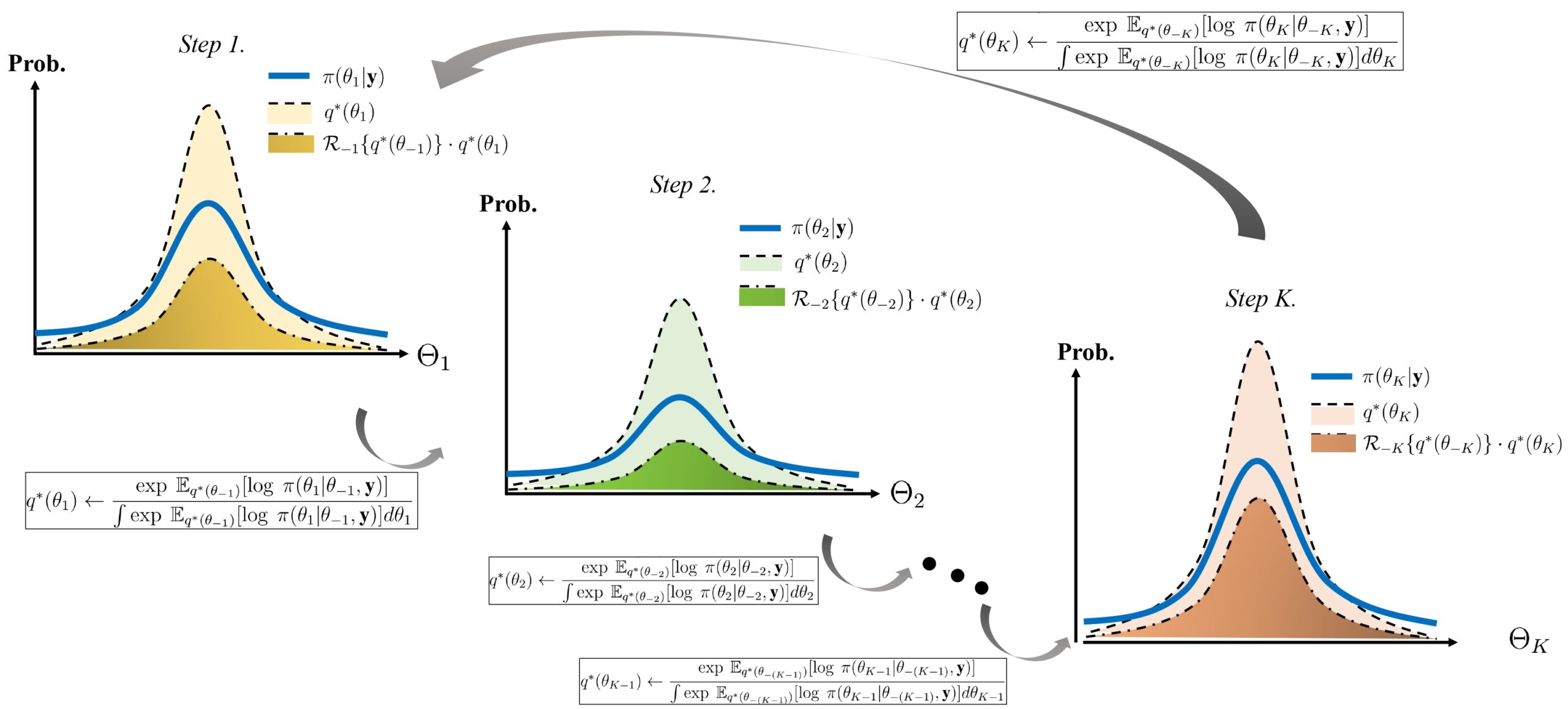

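There are two approximation strategies. The first approximates the posterior distribution with a simpler distribution, and the second approximates it by sampling. Laplace approximation belongs to the first. It approximates the (posterior) distribution with a Gaussian centered at the posterior mode (the MAP estimate). The covariance of that Gaussian is the inverse of the Hessian of the negative log posterior at the mode.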
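Below is a minimal sketch of the procedure for a one-dimensional parameter, using finite differences for the derivatives. The function `laplace_approx` and the Beta-shaped example density are hypothetical; the example was chosen because the answer can be checked by hand. The sketch finds the mode of the unnormalised log posterior with Newton steps. It then uses the negative inverse of the second derivative at that mode as the Gaussian's variance.

```python
import math

def laplace_approx(log_p, theta0, h=1e-4, tol=1e-10, max_iter=100):
    # log_p: unnormalised log posterior, log p(D | theta) + log p(theta)
    # Returns (mode, variance) of the approximating Gaussian N(mode, variance).
    theta = theta0
    for _ in range(max_iter):
        # Central finite differences for the first and second derivatives
        d1 = (log_p(theta + h) - log_p(theta - h)) / (2 * h)
        d2 = (log_p(theta + h) - 2 * log_p(theta) + log_p(theta - h)) / (h * h)
        step = d1 / d2
        theta -= step  # Newton step towards the maximum of log_p
        if abs(step) < tol:
            break
    d2 = (log_p(theta + h) - 2 * log_p(theta) + log_p(theta - h)) / (h * h)
    # Variance = inverse curvature of -log_p at the mode
    return theta, -1.0 / d2

# Unnormalised Beta(5, 3)-shaped posterior: theta^4 * (1 - theta)^2
mode, var = laplace_approx(lambda t: 4 * math.log(t) + 2 * math.log(1 - t), theta0=0.5)
print(mode, var)
```

For this density, the mode is 2/3. The second derivative of log p there is -4/(2/3)^2 - 2/(1/3)^2 = -27, so the Laplace variance is 1/27 ≈ 0.037. The printed values should be close to these.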

When to use Laplace Approximation?

When we don't know the shape of the posterior distribution. If we know the closed form of the posterior, we don't need an approximation. For example, if we know the posterior is Gaussian, we can simply find its parameters, because that is just an optimisation problem. However, if we don't know the form of the posterior, we have no parameters to optimise.

More precisely, we can write the posterior with the formula above (Bayes' rule), but its denominator P(D) = ∫ P(D|θ)P(θ) dθ is not tractable. Therefore, we assume some other distribution (a Gaussian here) and find the parameters of that Gaussian instead of computing P(D). The trick is that we never need the normalising term P(D). The Gaussian we approximate with is already normalised, and its parameters (mean and covariance) can be computed from the unnormalised posterior P(D|θ)P(θ) alone. So we are left to calculate only the parameters, as in the usual case (like in Bayesian Linear Regression).
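The reason P(D) is never needed fits in a short derivation. Here θ denotes the parameters, D the data, and H is notation introduced here for the Hessian. Because log P(D) does not depend on θ, it disappears from the gradient that locates the mode and from the second derivative that sets the covariance. At the mode the gradient is zero, so the second-order Taylor expansion there is exactly the log of a Gaussian:

```latex
\log P(\theta \mid D) = \log P(D \mid \theta) + \log P(\theta) - \log P(D)
\hat{\theta} = \arg\max_{\theta} \big[\log P(D \mid \theta) + \log P(\theta)\big]
H = -\nabla_{\theta}^{2} \big[\log P(D \mid \theta) + \log P(\theta)\big] \Big|_{\theta = \hat{\theta}}
\log P(\theta \mid D) \approx \log P(\hat{\theta} \mid D) - \tfrac{1}{2} (\theta - \hat{\theta})^{\top} H (\theta - \hat{\theta})
\;\Rightarrow\; P(\theta \mid D) \approx \mathcal{N}\big(\theta \mid \hat{\theta},\, H^{-1}\big)
```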

Why does the predictive integral of Bayesian Linear Regression produce a Gaussian?

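The predictive formula p(y*|x*,D) = ∫ p(y*|x*,w) p(w|D) dw can be thought of as a sum of Riemann bins, each of which is a product of two Gaussian functions. However, a sum of arbitrary Gaussians is a mixture, not in general a Gaussian. What makes this particular infinite sum a Gaussian is that y* depends linearly on w. Because of that, the product p(y*|x*,w) p(w|D) is a joint Gaussian in (y*, w), and integrating w out of a joint Gaussian leaves a Gaussian in y*.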
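The claim can be checked numerically. This sketch assumes a one-dimensional model with likelihood p(y*|x*,w) = N(y* | w x*, σ²) and posterior p(w|D) = N(w | m, s²). The numbers and helper names are hypothetical. Summing midpoint Riemann bins over w reproduces the closed-form predictive N(y* | m x*, σ² + x*² s²).

```python
import math

def normal_pdf(x, mean, var):
    # Density of N(mean, var) at x
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def predictive_riemann(y, x, m, s2, sigma2, n_bins=20000, width=12.0):
    # Midpoint Riemann sum over w of N(y | w*x, sigma2) * N(w | m, s2),
    # truncated to m +/- width standard deviations of the posterior
    s = math.sqrt(s2)
    lo, hi = m - width * s, m + width * s
    dw = (hi - lo) / n_bins
    total = 0.0
    for i in range(n_bins):
        w = lo + (i + 0.5) * dw  # midpoint of bin i
        total += normal_pdf(y, w * x, sigma2) * normal_pdf(w, m, s2) * dw
    return total

def predictive_closed_form(y, x, m, s2, sigma2):
    # Gaussian predictive: N(y | m*x, sigma2 + x^2 * s2)
    return normal_pdf(y, m * x, sigma2 + x * x * s2)

# Hypothetical values: x* = 2, posterior N(0.5, 0.3), noise variance 0.2
for y in (-2.0, -0.5, 1.0, 3.0):
    print(y, predictive_riemann(y, 2.0, 0.5, 0.3, 0.2),
          predictive_closed_form(y, 2.0, 0.5, 0.3, 0.2))
```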

But what if p(y*|x*,w) is a Gaussian with variance 0 (or a very small value)? Would the result still be a Gaussian, even though such a case seems unrealistic? And is erf(x), which is also an integral of a Gaussian, a Gaussian too?

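No, erf(x) is not a Gaussian. It integrates a Gaussian only up to x, so it is a monotone, S-shaped function that saturates at ±1. In the predictive formula, however, the integral over w runs from -inf to inf, so no erf term appears.

The limiting cases stay consistent:
- As the variance of p(y*|x*,w) goes to 0, the likelihood becomes a spike at y* = x*ᵀw. The predictive then becomes the distribution of x*ᵀw under the posterior, which is still a Gaussian.
- As the variance σ² goes to infinity, exp(-h/σ²) goes to 1 for any finite h, independent of the drawn sample. The likelihood then stops depending on w, and the integral over the posterior just gives its total mass, 1 (up to the likelihood's normalising constant, which itself goes to 0).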
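The same points can be written out in the one-dimensional setting, with likelihood N(y* | w x*, σ²) and posterior N(w | m, s²). These symbols are assumed here for illustration, and x* ≠ 0 is needed for the limit to be a proper Gaussian:

```latex
p(y_* \mid x_*, D) = \int_{-\infty}^{\infty} \mathcal{N}(y_* \mid w x_*, \sigma^2)\, \mathcal{N}(w \mid m, s^2)\, dw = \mathcal{N}\big(y_* \mid m x_*,\; \sigma^2 + x_*^2 s^2\big)
\lim_{\sigma^2 \to 0} p(y_* \mid x_*, D) = \mathcal{N}\big(y_* \mid m x_*,\; x_*^2 s^2\big)
\operatorname{erf}(x) = \frac{2}{\sqrt{\pi}} \int_{0}^{x} e^{-t^2}\, dt, \qquad \lim_{x \to \pm\infty} \operatorname{erf}(x) = \pm 1
```

The last line shows why erf is not a bell curve. It is a partial integral that rises monotonically between -1 and 1.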

What is KL divergence?

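Kullback-Leibler divergence, KL(q||p) = ∫ q(θ) log(q(θ)/p(θ)) dθ, measures how much one probability distribution q differs from another distribution p. Its best value is 0, reached only when the two distributions are identical. It is always non-negative and, in general, not symmetric, so it is not a true distance.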
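Here is a minimal sketch for discrete distributions; the helper name `kl_divergence` is hypothetical. It uses the conventions 0·log 0 = 0 and q_i·log(q_i/0) = ∞. It returns 0 for identical distributions and different values when the arguments are swapped.

```python
import math

def kl_divergence(q, p):
    # KL(q || p) = sum_i q_i * log(q_i / p_i) for discrete distributions.
    # Terms with q_i == 0 contribute 0; q_i > 0 with p_i == 0 gives infinity.
    total = 0.0
    for qi, pi in zip(q, p):
        if qi == 0:
            continue
        if pi == 0:
            return math.inf
        total += qi * math.log(qi / pi)
    return total

a = [0.5, 0.5]
b = [0.9, 0.1]
print(kl_divergence(a, a))  # 0.0 for identical distributions
print(kl_divergence(a, b))  # ≈ 0.511
print(kl_divergence(b, a))  # ≈ 0.368, so KL is not symmetric
```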

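For the non-negative property, look at the integrand q log(q/p). It is positive wherever q(θ) > p(θ) and negative wherever q(θ) < p(θ). Since both distributions integrate to 1, the regions where q(θ) is greater always outweigh the others, so the total is never negative.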
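The non-negativity can be shown formally with Jensen's inequality applied to the concave log, with the integrals taken over the region where q(θ) > 0. Equality holds only when p = q:

```latex
-\mathrm{KL}(q \,\|\, p) = \int q(\theta) \log \frac{p(\theta)}{q(\theta)}\, d\theta
\;\le\; \log \int q(\theta)\, \frac{p(\theta)}{q(\theta)}\, d\theta
= \log \int_{q > 0} p(\theta)\, d\theta \;\le\; \log 1 = 0
```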

Why is KL divergence non-symmetric?

KL(q||p) is an expectation under q. It weights the log-ratio log(q/p) by q, so it measures the divergence over the region of the x-axis where q is large. KL(p||q), on the other hand, weights by p, so it measures the divergence where p is large.

For example, let p and q be uniform distributions, where q is on [0, 1] and p is on [0, 2]. In this configuration, KL(q||p) = ∫₀¹ 1·log(1/(1/2)) dx = log(2).

On the other hand, KL(p||q) = -log(2)/2 + ∞ = ∞:
- The interval [0, 1] contributes (1/2)·log((1/2)/1) = -log(2)/2.
- The interval from 1 to 2 contributes log(∞), because it is probable under p but has zero probability under q.
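The uniform example can be checked with a small sketch; the bin edges and the helper `kl_piecewise` are illustrative assumptions. Both densities are constant on bins of width 0.5, so summing width · q · log(q/p) over the bins gives the integral exactly.

```python
import math

# Densities are constant on each bin, so the bin-wise sum is the exact integral.
edges = [0.0, 0.5, 1.0, 1.5, 2.0]
q = [1.0, 1.0, 0.0, 0.0]  # Uniform(0, 1) density on each bin
p = [0.5, 0.5, 0.5, 0.5]  # Uniform(0, 2) density on each bin

def kl_piecewise(a, b, edges):
    # KL(a || b) = integral of a(x) * log(a(x) / b(x)) dx, with 0 * log 0 = 0
    total = 0.0
    for i in range(len(a)):
        width = edges[i + 1] - edges[i]
        if a[i] == 0:
            continue  # no mass under a: contributes nothing
        if b[i] == 0:
            return math.inf  # a has mass where b has none
        total += width * a[i] * math.log(a[i] / b[i])
    return total

print(kl_piecewise(q, p, edges))  # log(2) ≈ 0.693
print(kl_piecewise(p, q, edges))  # inf
```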

The next topic is MCMC; you can check it out here.

Ref:

https://en.wikipedia.org/wiki/Bayesian_linear_regression
